June 25, 2025

Building a Robot Librarian: My Journey with the BNL Project in Luxembourg

Earlier this month, I had the opportunity to travel to Luxembourg to participate in a demo day for the BNL Project - an ambitious initiative to build a robot librarian. The concept was simple but technically challenging: a robot that autonomously moves through a library, scans books on the shelves, and detects whether each book is correctly placed.

As the lead for the project's computer vision system, my role was to make this possible. That meant developing the robot’s “eyes” and “brain” - recognizing books, identifying them, and tracking their order. But things didn’t exactly go as planned. What followed was a rollercoaster of challenges, long nights, and last-minute innovations.

The Plan: Data Matrix Meets AI Vision

Our system was designed around a hybrid approach:

- Each book had a Data Matrix code (a small 2D barcode) on its spine for fast, unique identification.

- For robustness, we also implemented visual identification using SIFT (Scale-Invariant Feature Transform) keypoints combined with LBP, Gabor filter, and HOG descriptors. This would help identify books from the appearance of their spines, even when a code couldn't be read clearly.

The robot would move along the shelves, capture images, detect book spines using AI, identify each book by reading its code or matching its visual features, and log any misplaced or missing items.
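To make the last step concrete, here is a minimal sketch of how misplaced and missing books could be derived once each spine on a shelf has been identified. This is not the code we ran on the robot: `find_misplaced`, `scanned_ids`, and `catalog_order` are hypothetical names, and using a longest increasing subsequence to decide which books are "in order" is my assumption for illustration.

```python
from bisect import bisect_left

def find_misplaced(scanned_ids, catalog_order):
    """Compare one shelf scan (left to right) against the expected order.

    Returns (misplaced, missing). Books outside the longest run that is
    already in catalog order are reported as misplaced, along with any
    scanned ID that does not belong on this shelf at all.
    """
    rank = {book_id: i for i, book_id in enumerate(catalog_order)}
    known = [b for b in scanned_ids if b in rank]
    unknown = [b for b in scanned_ids if b not in rank]

    # Longest strictly increasing subsequence over catalog ranks.
    ranks = [rank[b] for b in known]
    tail_vals = []            # smallest tail rank for each subsequence length
    tail_idx = []             # index into `ranks` of that tail
    prev = [-1] * len(ranks)  # back-pointers for reconstruction
    for i, r in enumerate(ranks):
        k = bisect_left(tail_vals, r)
        if k == len(tail_vals):
            tail_vals.append(r)
            tail_idx.append(i)
        else:
            tail_vals[k] = r
            tail_idx[k] = i
        prev[i] = tail_idx[k - 1] if k > 0 else -1

    in_order = set()
    i = tail_idx[-1] if tail_idx else -1
    while i != -1:
        in_order.add(i)
        i = prev[i]

    misplaced = [b for i, b in enumerate(known) if i not in in_order] + unknown
    scanned_set = set(scanned_ids)
    missing = [b for b in catalog_order if b not in scanned_set]
    return misplaced, missing

if __name__ == "__main__":
    catalog = ["A", "B", "C", "D", "E"]
    scan = ["A", "C", "B", "D", "X"]
    print(find_misplaced(scan, catalog))
```

When two neighbouring books are swapped, either one could be called the misplaced one; this sketch simply reports one of them, which is enough to send a human to the right spot on the shelf.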

Reality Hits: Camera Trouble

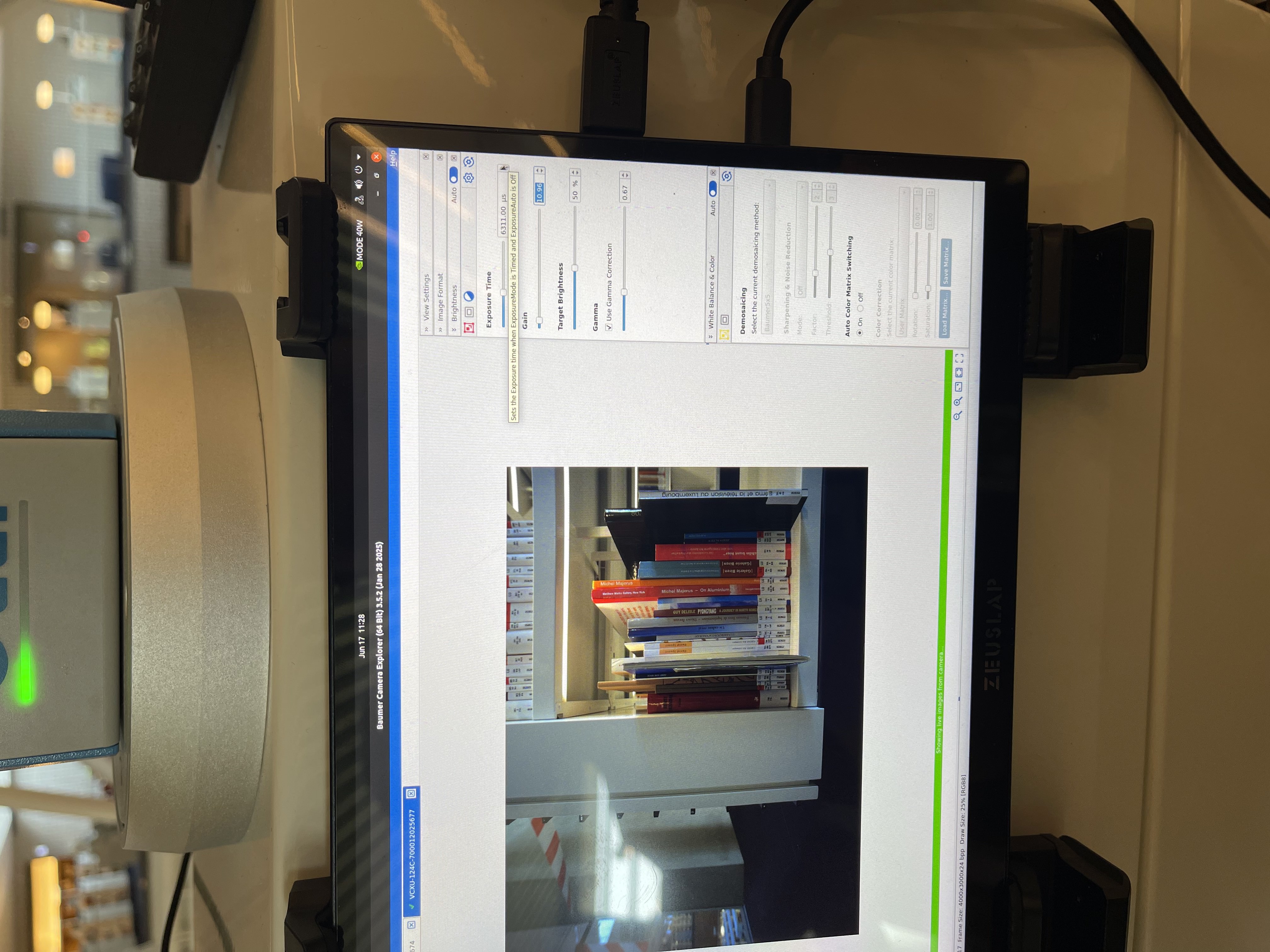

But reality quickly tested our system. The camera hardware we had - while professional - proved problematic:

- The lens quality and low-light performance weren’t ideal. Images often came out underexposed, making it difficult for our AI models to detect book spines reliably.

- There was also a focus issue, especially with the Data Matrix codes. The codes appeared blurry, inconsistent, or unreadable.

This created a huge risk: If the robot couldn’t read the code or detect the book, it couldn’t do its job.

AI Confidence: Playing with the Threshold

Due to poor lighting and inconsistent image quality, our AI model started failing to detect books in certain conditions. One of my key tasks became tuning the confidence threshold of the detection algorithm.

By lowering the threshold slightly, I was able to increase the model’s tolerance to darker or noisier images. It was a delicate balance - too low, and false positives flooded in; too high, and we missed books entirely. In the end, it became a strategic tradeoff to keep the system functional in less-than-ideal imaging conditions.
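The tradeoff is easier to see with numbers. Below is a minimal sketch of a threshold sweep over labeled detections; the function name `sweep_thresholds` and the sample scores are made up for illustration and are not measurements from the project.

```python
def sweep_thresholds(detections, num_ground_truth, thresholds):
    """Report precision and recall at each confidence threshold.

    detections: list of (score, is_true_positive) pairs from a labeled
    validation set. num_ground_truth: number of real books in that set.
    """
    rows = []
    for t in thresholds:
        kept = [is_tp for score, is_tp in detections if score >= t]
        tp = sum(kept)                # correct detections that survive
        fp = len(kept) - tp           # false positives that survive
        precision = tp / len(kept) if kept else 1.0
        recall = tp / num_ground_truth if num_ground_truth else 0.0
        rows.append((t, tp, fp, precision, recall))
    return rows

if __name__ == "__main__":
    # Invented example scores, not project data.
    sample = [(0.90, True), (0.80, True), (0.60, False),
              (0.55, True), (0.40, False), (0.35, True)]
    for t, tp, fp, p, r in sweep_thresholds(sample, 5, [0.3, 0.5, 0.7]):
        print(f"threshold={t:.2f} tp={tp} fp={fp} precision={p:.2f} recall={r:.2f}")
```

Lowering the threshold raises recall (fewer missed books) at the cost of precision (more false positives), which is exactly the balance described above.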

Imaging on the Move

Another unexpected challenge was capturing clean images while the robot was moving. Because the shelves were scanned in motion, there was a high risk of motion blur, especially with poor lighting.

To solve this, we had to:

- Dynamically adjust camera settings - like exposure time (shutter speed) and gain - on the fly.

- Fine-tune the balance between brightness and sharpness so we could freeze motion without sacrificing visibility.

- Optimize when and how the robot should trigger a frame capture to maximize clarity.

This required a deep understanding of both the robot’s behavior and the camera’s hardware constraints.
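The brightness-versus-sharpness balance comes down to simple arithmetic. Here is a rough sketch, assuming the robot moves parallel to the shelf at constant speed and the sensor responds linearly to light. The function names and the example values (0.2 m/s, 4000 pixels per meter) are illustrative assumptions, not the project's actual settings.

```python
import math

def motion_blur_px(speed_m_s, exposure_s, px_per_m):
    """Approximate blur length in pixels for sideways motion during one exposure."""
    return speed_m_s * exposure_s * px_per_m

def max_exposure_s(speed_m_s, px_per_m, max_blur_px):
    """Longest exposure that keeps blur within the given pixel budget."""
    if speed_m_s <= 0:
        return float("inf")  # a stationary robot has no motion blur limit
    return max_blur_px / (speed_m_s * px_per_m)

def gain_db_to_compensate(old_exposure_s, new_exposure_s):
    """Extra gain (dB) needed to keep brightness after shortening exposure.

    Assumes a linear sensor, where halving exposure costs about 6 dB.
    """
    return 20.0 * math.log10(old_exposure_s / new_exposure_s)

if __name__ == "__main__":
    speed, scale = 0.2, 4000.0   # assumed: 0.2 m/s, 0.25 mm per pixel
    print(motion_blur_px(speed, 0.010, scale))       # blur at 10 ms exposure
    limit = max_exposure_s(speed, scale, 1.0)        # 1 px blur budget
    print(limit, gain_db_to_compensate(0.010, limit))
```

The extra gain amplifies noise along with the signal, which is why shortening the exposure alone could not solve the problem in a dim library.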

Demo Day: From Panic to Prototype

Despite these obstacles, I pressed on. When we couldn't fix the camera focus issue for the Data Matrix, I made the decision to fall back entirely on the visual recognition algorithms: SIFT, LBP, Gabor, and HOG-based search.

Most of that work happened overnight, with very limited real-world testing. I was essentially coding blind, relying on my experience and simulated data to design the fallback logic.
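In spirit, the fallback logic looked something like the sketch below. It is a simplified reconstruction, not the demo code: `identify_spine`, `decode_code`, `describe`, and the `0.8` similarity cutoff are hypothetical, and a single cosine similarity over one feature vector stands in for the combined SIFT, LBP, Gabor, and HOG search.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def identify_spine(image, decode_code, describe, reference, min_similarity=0.8):
    """Identify one spine: try the code first, then fall back to appearance.

    decode_code(image) -> book ID, or None if the code is unreadable.
    describe(image)    -> feature vector for the spine crop.
    reference          -> dict mapping book ID to its stored feature vector.
    Returns (book_id_or_None, method, score).
    """
    code = decode_code(image)
    if code is not None:
        return code, "code", 1.0

    # Fallback: nearest reference descriptor by cosine similarity.
    query = describe(image)
    best_id, best_sim = None, -1.0
    for book_id, ref_vec in reference.items():
        sim = cosine(query, ref_vec)
        if sim > best_sim:
            best_id, best_sim = book_id, sim

    if best_sim >= min_similarity:
        return best_id, "visual", best_sim
    return None, "unknown", best_sim   # too uncertain to name a book

if __name__ == "__main__":
    refs = {"bk1": [1.0, 0.0], "bk2": [0.0, 1.0]}
    unreadable = lambda img: None          # simulates a blurry Data Matrix
    print(identify_spine("crop", unreadable, lambda img: [0.9, 0.1], refs))
```

Returning the method alongside the ID makes it possible to log how often the robot had to rely on appearance rather than the code.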

And yet - on demo day, it somehow worked.

The robot navigated the shelves, scanned books, and identified some items as correctly placed and others as misplaced. The performance wasn’t perfect, but it was a solid proof of concept that impressed the stakeholders and proved the viability of our vision.

Lessons Learned

This project was more than just a technical challenge - it was a crash course in real-world robotics and AI deployment. Some key takeaways:

- Hardware limitations can derail even the best algorithms.

- Flexibility and fallback strategies are essential in robotics.

- Tuning AI models to match real-world variability is more of an art than a science.

- Camera tuning (exposure time and gain) becomes critical when working in dynamic environments with moving robots.

Above all, it reminded me that in robotics, perfection isn’t the goal - resilience is.